Supercomputers have been absolutely essential for four scientists from DTU Wind. Together they have more than 50 years of experience in using high-performance computing for their research in meteorology, climate and wind energy.

They have used local, national and international HPC facilities, but their research has primarily relied on SOPHIA, the HPC facility based at DTU. The SOPHIA system is also part of the national DeiC Throughput HPC.

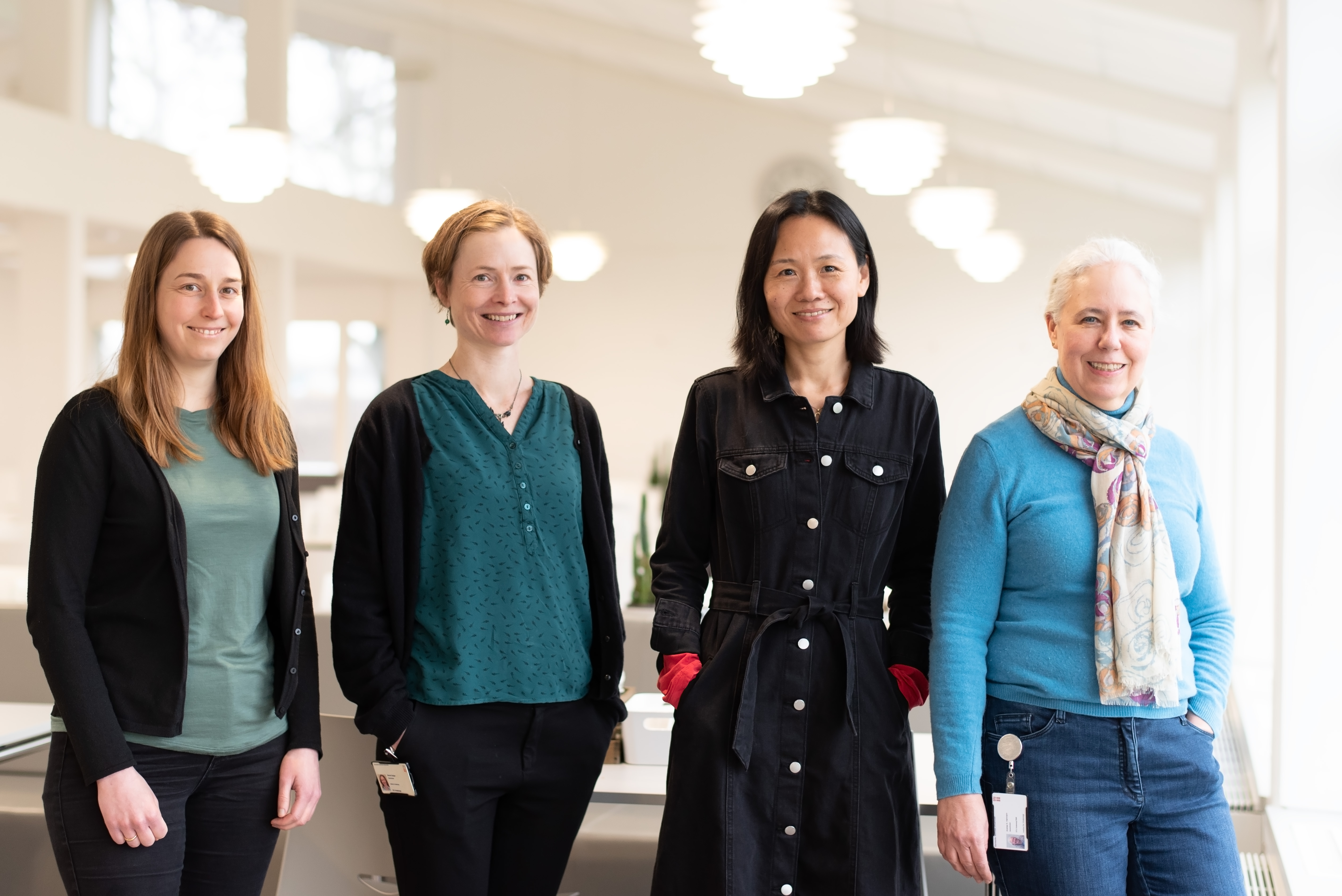

The four scientists, Andrea Hahmann, Merete Badger, Jana Fischereit and Xiaoli Guo Larsén, all do research in cutting-edge wind energy technology for a sustainable future.

Scientists from DTU Wind (from left to right): Jana Fischereit, Merete Badger, Xiaoli Guo Larsén and Andrea N. Hahmann.

The whole value chain within wind energy can be explored

Researchers and companies can test everything from nano-scale materials and large-scale structures to fully powered demonstrations of turbines as DTU Wind Energy operates some of the world’s most advanced research infrastructures. Furthermore, satellite observations and atmospheric modelling are used for wind energy applications, so the whole value chain within wind energy can be studied.

“I think our institute is one of the very few places in the world that has this broad knowledge in one place, and the strength is to be covering all scales from materials to the mapping of global winds. The capacity of finding an expert in anything, when you need it, is really useful,” says Andrea Hahmann, senior scientist at DTU Wind Energy.

“Denmark has been a pioneer within wind energy, as society encouraged these kinds of innovative ideas and created an efficient system ahead of others. For instance, the creation of the first European Wind Atlas and the design of turbines that really nobody else in the world was doing more than 30 years ago,” says Xiaoli Guo Larsén, senior scientist at DTU Wind Energy.

Peter Hauge Madsen, Head of Department, DTU Wind Energy, states: “Our field requires that we are completely up-to-date with being able to model the atmosphere and the large constructions that modern wind turbines are. For that, HPC is absolutely crucial.”

European Wind Atlas created using supercomputing

The wind atlas was designed to provide a mesoscale and microscale wind climatology for Europe and Turkey. In 2018, the joint European project was allocated 57 million core hours on the MareNostrum4 supercomputer at the Barcelona Supercomputing Center (BSC) via PRACE, and 99% of the resources were used within six months. MareNostrum4 has approximately 165,000 processor cores.
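To put the allocation in perspective, the figures above can be turned into an average number of cores kept busy. The sketch below is only a back-of-the-envelope estimate: it assumes that six months is about 182.5 days, that the load was spread evenly over the period, and that the approximately 165,000 processors quoted for MareNostrum4 can be counted as cores. The function and variable names are illustrative.

```python
# Back-of-the-envelope estimate based on the figures quoted in the article.
ALLOCATED_CORE_HOURS = 57_000_000   # PRACE allocation (from the article)
FRACTION_USED = 0.99                # share of the allocation used (from the article)
MACHINE_CORES = 165_000             # approximate size of MareNostrum4 (from the article)

# Assumption: "six months" is taken as 182.5 days of wall-clock time.
HOURS_IN_SIX_MONTHS = 182.5 * 24


def average_cores_in_use(core_hours: float, wall_hours: float) -> float:
    """Average number of cores busy if the load were spread evenly."""
    return core_hours / wall_hours


used_core_hours = ALLOCATED_CORE_HOURS * FRACTION_USED
avg_cores = average_cores_in_use(used_core_hours, HOURS_IN_SIX_MONTHS)
share_of_machine = avg_cores / MACHINE_CORES

print(f"Core hours used: {used_core_hours:,.0f}")
print(f"Average cores in use: {avg_cores:,.0f}")
print(f"Share of the machine: {share_of_machine:.1%}")
```

Under these assumptions the project kept roughly 12,900 cores busy around the clock for half a year, or close to 8% of the machine. Real usage would have come in bursts rather than as an even load, so this is an average, not a peak.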

Map of mean wind speed at 100 m above ground level for Northern Europe as displayed by the NEWA website. PRACE resources were used for the calculations.

“NEWA, the New European Wind Atlas, is essentially a database of wind conditions for the European domain, and it can, for instance be used to determine which areas in Denmark have suitable conditions for the installation of wind farms,” says Andrea Hahmann.

“A total of six petabytes of raw data was produced during the simulations in the PRACE cluster. It wasn't possible to carry out the computations on SOPHIA, which has only approximately 15,000 CPUs, as the computational burden was too large. In addition, we needed a single computer to avoid introducing differences between local calculations. At the end of the day, the NEWA could not have been performed without supercomputing,” she says.

“In the future, such calculations will be done using coupled atmosphere and wave models, further increasing the required computational resources,” says Jana Fischereit, also a researcher at DTU Wind Energy.

Essential to have storage and processing capacity

It is important to have enough storage space and processing capacity for the whole chain of data processes. Wind data generated from satellite observations was initially stored on local disks and later moved to local server facilities. These facilities have been upgraded several times over the years, as the amount of available satellite data is constantly increasing.

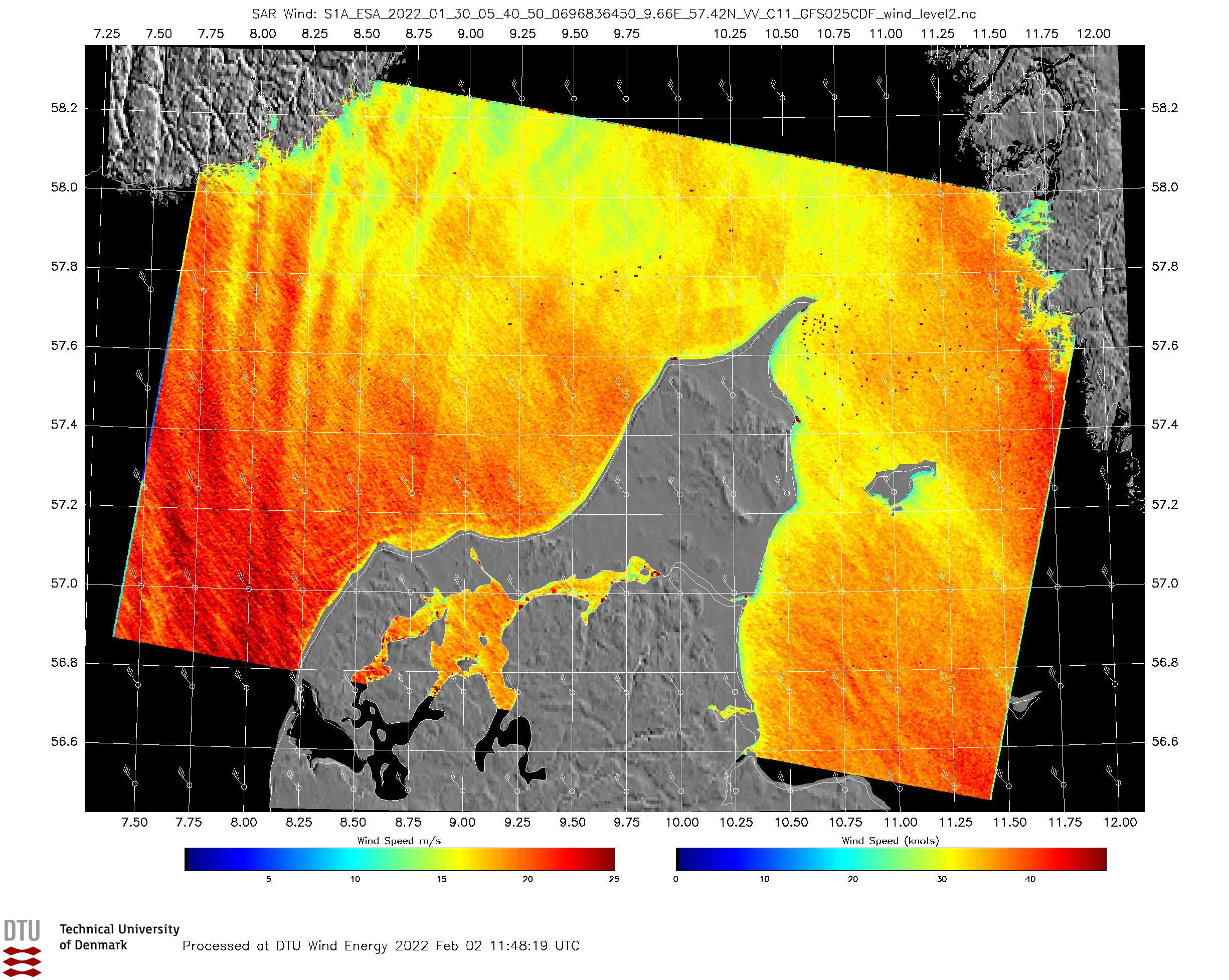

“In my research, we collect satellite observations of radar signals that are returned from the sea surface, and these observations can be converted to maps of the ocean wind speed. Data is processed on SOPHIA in near real-time, meaning data is downloaded from the Copernicus Open Access Hub every day, and wind maps are published online within 24 hours,” says Merete Badger, senior scientist at DTU Wind Energy.

“Right now, we participate in another European project, where we explore Data Information Access Services (DIAS). The next big step is to evaluate the use of cloud services, where satellite data from Copernicus could be more readily available and eliminate download procedures. The advantages and challenges of taking such a next step using international platforms will be mapped during the next year to help us make a proper decision on future data handling,” she says.

Satellite-based wind map of the storm Malik (30 January 2022 at 05:40 UTC) converted using DeiC Throughput HPC.

There are challenges to using new infrastructures

All four scientists agree that getting started with supercomputing is still an individual effort and that more guidance would be helpful. In many cases, HPC competencies develop through a “word-of-mouth” approach within research communities. When it comes to challenging areas within HPC, the scientists highlight documentation, security requirements, software and data management. Each HPC infrastructure has its own specific challenges, and the administrative burden around data is piling up.

“Requirements for security have increased, which of course is good for avoiding erasing data and giving the right people administrative rights to data, but it also means that it is not as effortless to access data anymore,” says Xiaoli Guo Larsén.

“When I work at home on my PC, I use another operating system on the computer, and I have to update the software every two weeks, which is time-consuming, but I understand they want security first,” she says.

“It can be time-consuming to manage the access rights whenever a new colleague needs to access a data set, as it may take some time to figure out which group they should be part of or which directories they should have access to, and to set it up correctly,” says Merete Badger.

“We try to build some documentation of best practices. However, no specific time is assigned for this, so you do it in between tasks, and maybe it could be managed in a better way,” says Jana Fischereit.

“Computational capacity, memory and transfer time can also be bottlenecks,” says Andrea Hahmann.

“During the NEWA project, the biggest obstacle was the data transfer, as it took as long to transfer the data from the BSC cluster to our machine here in Denmark as it took to generate the data. Sometimes, the flow stopped. If we couldn't transfer data, we couldn't continue running. The computations took about six months, and the data transfer was nearly the same,” she says.
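A rough calculation illustrates why the transfer became a bottleneck. The sketch below estimates the sustained rate needed to move the six petabytes of raw data mentioned earlier in about six months. The article does not state how much of the raw data was actually transferred, so the full volume is an assumption, as are the 182.5-day period and the use of decimal petabytes. The function name is illustrative.

```python
# Rough estimate of the sustained transfer rate, under stated assumptions.
PETABYTE = 10**15            # assumption: decimal petabyte (10^15 bytes)
DATA_VOLUME_PB = 6           # raw data produced (from the article)
TRANSFER_DAYS = 182.5        # assumption: "nearly six months" taken as 182.5 days


def sustained_rate(volume_bytes: float, days: float) -> dict:
    """Average rate needed to move volume_bytes in the given number of days."""
    seconds = days * 24 * 3600
    bytes_per_second = volume_bytes / seconds
    return {
        "megabytes_per_second": bytes_per_second / 1e6,
        "gigabits_per_second": bytes_per_second * 8 / 1e9,
        "terabytes_per_day": volume_bytes / days / 1e12,
    }


rate = sustained_rate(DATA_VOLUME_PB * PETABYTE, TRANSFER_DAYS)

print(f"{rate['megabytes_per_second']:.0f} MB/s sustained")
print(f"{rate['gigabits_per_second']:.2f} Gbit/s sustained")
print(f"{rate['terabytes_per_day']:.1f} TB per day")
```

Under these assumptions the link would have to carry roughly 3 Gbit/s, or about 33 TB per day, without interruption for half a year. Any pause in the flow raises the rate needed for the rest of the period.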

“In the future, there will probably be even higher competition for HPC resources, and it may not be manageable from our individual sites to provide the right support for accessing both national and international HPC,” says Andrea Hahmann.

Create learning environments and provide online training

The amount of research data is growing faster than ever, and developing the competencies needed to access and use HPC facilities may be a limiting factor for new users.

“As a new HPC user, you might be a bit scared at the beginning to do some work because you are worried that you're interfering with someone else’s work. If you are in a safe training environment where nothing can be removed, that could help get people started,” says Jana Fischereit.

“It could even be nice to have a list of what you should not do when using HPC,” says Xiaoli Guo Larsén.

“Yeah, for example, how to avoid submitting 2000 jobs without knowing one has done it,” says Andrea Hahmann laughing.

“A short online course on how to get started using HPC could be beneficial. I know in practice it's hard to keep such a course updated, but just knowing the basics could maybe help when onboarding onto larger systems with many registered users,” says Merete Badger.

“Yes, and specific courses for introducing new scientists to HPC, such as master's and PhD students,” says Andrea Hahmann.

Envision this for the future: You are an HPC pilot entering an online simulator and taking a basic online certificate before you board your world-class HPC facility and “take off”, guided by a fantastic support “tower” that will help you on your many research quests! Maybe it is not so far away. In 2022, DeiC is working on a virtual SLURM simulator, as one of several initiatives, that may serve as a learning environment for new HPC users.

Background

All four scientists are from DTU Wind Energy. Merete Badger is a senior scientist in the group called “Remote sensing and meteorology”, and she was educated as a geographer at the University of Copenhagen. Since her PhD, she has worked with satellite data, and she is an experienced user of the HPC facility SOPHIA. Andrea N. Hahmann and Xiaoli Guo Larsén are also senior scientists. Andrea was educated as a meteorologist at the University of Utah, and she has used HPC since her PhD, when she modelled thunderstorms in the Amazon. She has used local/national HPC (SOPHIA) and international HPC (US and Spain). Xiaoli has a PhD in marine boundary layer meteorology from Uppsala University. She has been using SOPHIA to simulate storms (wind and waves) and calculate extreme wind and turbulence. Jana Fischereit is a young researcher with a PhD in meteorology from the University of Hamburg, simulating the ecosystem of the atmosphere, the waves and the ocean and the effect on wind farms. She has used HPC in Denmark and Germany.

Scientific output

DeiC continuously monitors who uses the national and international HPC resources, and this article is inspired by the online publication list, which hosts more than 1300 publications. A total of 255 publications involved international HPC, and researchers from DTU Wind Energy were co-authors on three of these publications. You can explore the recent work by following the specific links below.

References

- Murcia, J. P. et al. (2022) Validation of European-scale simulated wind speed and wind generation time series. Applied Energy. DOI: 10.1016/j.apenergy.2021.117794.

- Fischereit, J. et al. (2021) Comparing and validating intra-farm and farm-to-farm wakes across different mesoscale and high-resolution wake models. Wind Energy Science [preprint], in review. DOI: 10.5194/wes-2021-106.

- Larsén, X. G. and Fischereit, J. (2021) A case study of wind farm effects using two wake parameterizations in the Weather Research and Forecasting (WRF) model (V3.7.1) in the presence of low-level jets. Geoscientific Model Development. DOI: 10.5194/gmd-14-3141-2021.

- Larsén, X. G. et al. (2021) Calculation of Global Atlas of Siting Parameters. DTU Wind Energy. DTU Wind Energy E No. E-Report-0208. URL: https://orbit.dtu.dk/en/publications/calculation-of-global-atlas-of-siting-parameters.

- Dörenkämper, M. et al. (2020) The making of the New European Wind Atlas - Part 2: Production and evaluation. Geoscientific Model Development. DOI: 10.5194/gmd-13-5079-2020.

- Hahmann, A. N. et al. (2020) The making of the New European Wind Atlas - Part 1: Model sensitivity. Geoscientific Model Development. DOI: 10.5194/gmd-13-5053-2020.

- Hasager, C. B. et al. (2020) Europe's offshore winds assessed with synthetic aperture radar, ASCAT and WRF. Wind Energy Science. DOI: 10.5194/wes-5-375-2020.

- Badger, M. et al. (2019) Inter-calibration of SAR data series for offshore wind resource assessment. Remote Sensing of Environment. DOI: 10.1016/j.rse.2019.111316.