"It all started slightly by chance," says Kristian Sommer Thygesen, professor at DTU Physics. We knew that large GPU-based exascale supercomputers would become an important part of the future HPC landscape, and therefore of much of our research, but we were a bit cautious nonetheless and wanted to look into it further. We were in touch with CSC in Finland in connection with a different project [NOMAD, ed.], and we were porting our electronic structure code, which we use to calculate the properties of materials, to AMD GPUs. Part of the NOMAD project involved developing workflow software for exascale computers, which makes it possible to manage a large number of calculations at the same time. This is needed on a supercomputer such as LUMI, which can run 100,000 calculations simultaneously, with 10 calculations on each of its 10,000 GPUs. The obvious choice was to test the workflow software on LUMI to see whether it could really be scaled up to such a large computer.

LUMI Grand Challenge

We were contacted by CSC, who gave us the chance to run on the whole of LUMI, i.e. at full capacity, for 6 hours. We didn't even know that this was an option. The only catch was that we had just 14 days to prepare. "I honestly didn't think it was possible," Kristian says with a wry smile. I discussed it with my team, and they didn't think we'd be able to make it either. And yet we couldn't say no to the challenge, so we went for it and pulled out all the stops. But it wasn't without its difficulties. During these 14 days, LUMI was actually down for a relatively large part of the time, which made it hard to test our codes properly. My team of five people literally worked day and night to get everything set up. A large number of components need to work together when you're running real production on the third largest computer in the world. There was our simulation code, GPAW, which had only just been made GPU-ready. Then there was the workflow manager, the database with the 100,000 materials to be analysed, i.e. the scientific part of it, and finally the handling of the Lustre file system on LUMI. Fortunately, DeiC, the DTU Front Office and the experts at CSC in Finland were all very helpful and quick to respond to our enquiries.

In the Zone

Lots of technical challenges came up during the Grand Challenge run, and they had to be solved in a very short amount of time. I was over in the 'control room' every now and then to check in with the team and see how things were going, but I couldn't get in touch with them at all. I had brought them sushi at the institute on the Saturday, when they were up until midnight making the final preparations. It was very exciting and also motivating for all of us, even though we didn't get as much out of it scientifically as we had hoped, because the first four hours were actually spent solving technical issues related to a software library that had been updated on LUMI.

However, everything worked during the last hour, in which we ran 50,000 calculations simultaneously on LUMI. This confirmed that our workflow software really worked and could scale to exascale (or 0.5 exascale to be precise).
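The figures quoted in this article can be cross-checked with a few lines of arithmetic. The sketch below is only an illustration: the variable and function names are hypothetical, and the numbers are the rounded ones given in the text (10,000 GPUs, 10 calculations per GPU, a 6-hour run, 50,000 simultaneous calculations achieved).

```python
# Rounded figures quoted in the article; all names here are illustrative.
GPUS_ON_LUMI = 10_000      # GPUs available in a full-machine run
CALCS_PER_GPU = 10         # calculations sharing one GPU
RUN_HOURS = 6              # length of the Grand Challenge slot
CALCS_ACHIEVED = 50_000    # simultaneous calculations actually reached


def full_machine_estimate(gpus, calcs_per_gpu, hours):
    """Return (maximum concurrent calculations, GPU hours consumed)."""
    return gpus * calcs_per_gpu, gpus * hours


max_concurrent, gpu_hours = full_machine_estimate(
    GPUS_ON_LUMI, CALCS_PER_GPU, RUN_HOURS
)
fraction_reached = CALCS_ACHIEVED / max_concurrent

print(f"Maximum concurrent calculations: {max_concurrent:,}")
print(f"GPU hours for the full run: {gpu_hours:,}")
print(f"Share of maximum concurrency reached: {fraction_reached:.0%}")
```

Under these assumptions, the full-machine figure comes out at 100,000 concurrent calculations, the run at 60,000 GPU hours (the allocation listed for the Grand Challenge), and the 50,000 simultaneous calculations at half of the theoretical maximum.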

Pictured is Kristian Sommer Thygesen with his team of developers consisting of Mikael Kuisma, Jens Jørgen Mortensen, Ask Hjort Larsen and Tara Boland.

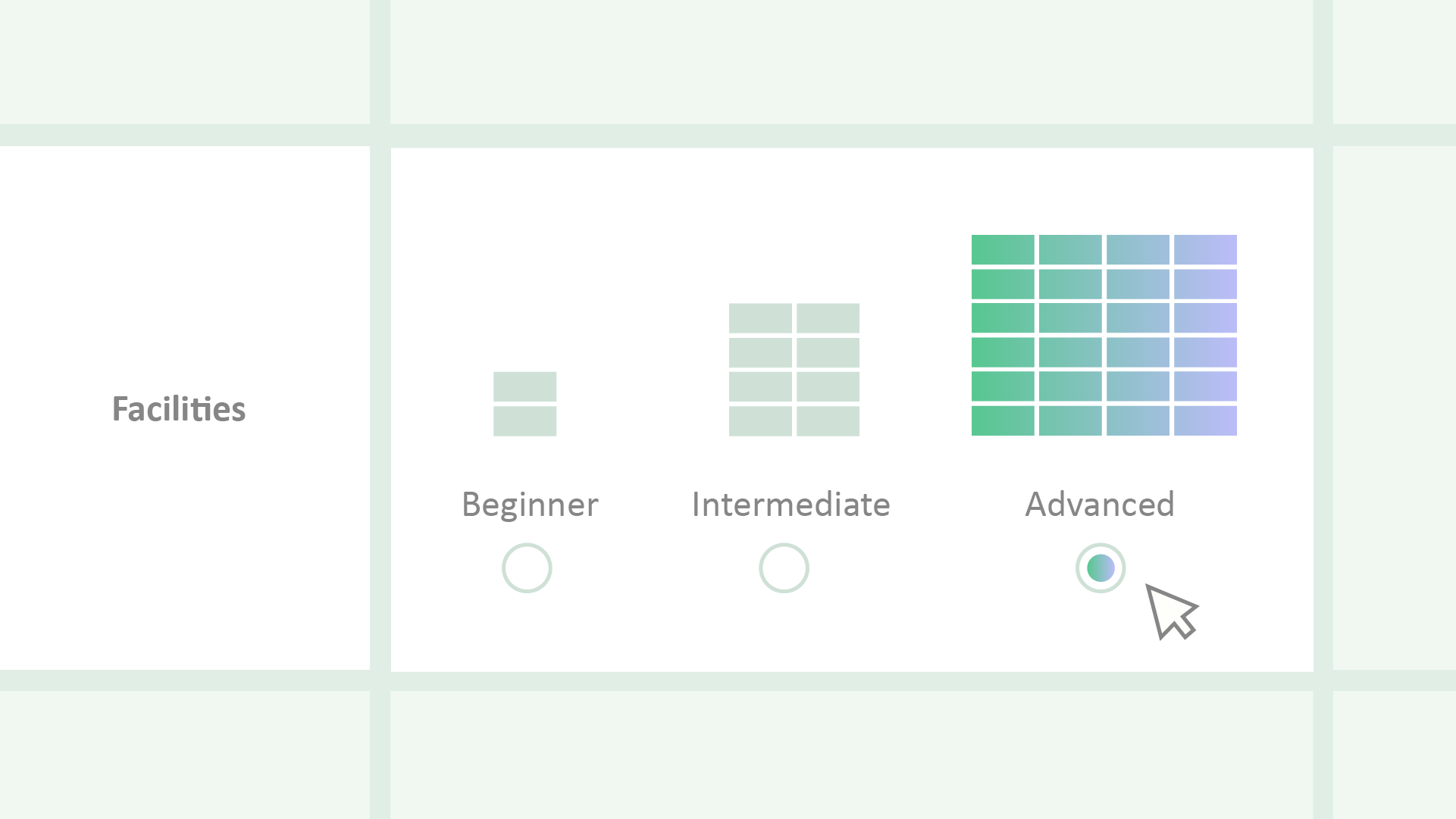

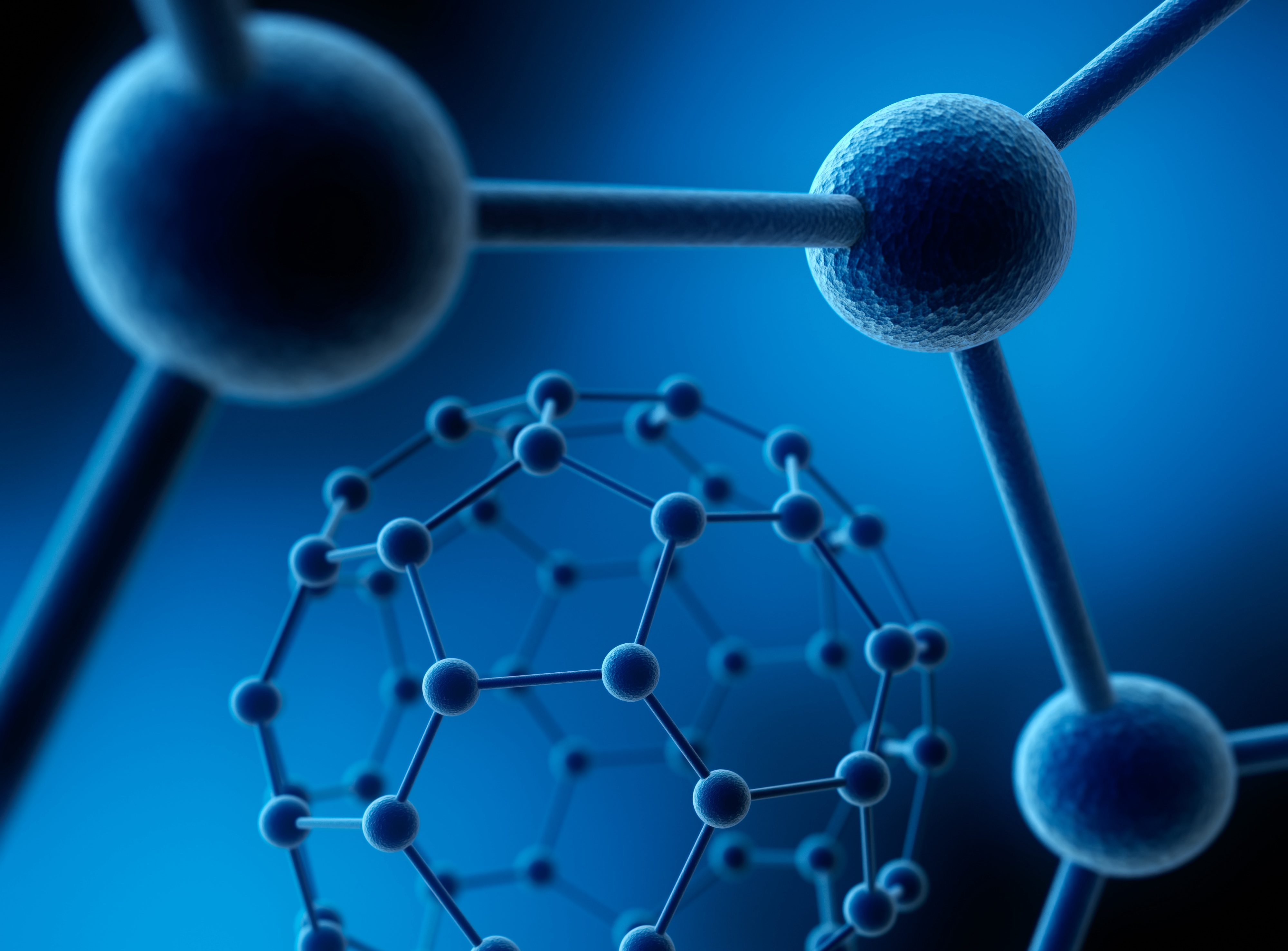

Calculations based on quantum mechanical principles require HPC

We know the atomic structure of around 200,000 crystalline materials, but for the vast majority of them we do not know the basic mechanical, electronic, optical or magnetic properties, so their application potential remains unknown. Instead of running traditional, time-consuming laboratory tests to identify the properties of all these materials, we can now calculate properties such as their mechanical strength or their ability to absorb light. These calculations are based directly on quantum mechanical equations, which makes them very accurate, but they require massive HPC resources. So far, we have only performed our calculations on the DTU supercomputer Niflheim, which is a CPU facility, because we haven't had access to large GPU facilities. This is also one of the reasons why we didn't start moving our code to GPUs earlier. The access to LUMI is a game changer for us.

The LUMI supercomputer can accelerate our research into materials for solar cells and catalysts

The use of HPC in materials research is not new. The basement at DTU Physics houses the Niflheim supercomputer. We could have run our calculations on it, but LUMI just happens to be 100 times faster. In addition to performing materials calculations, we also work on developing better simulation methods. This is partly about achieving a higher degree of accuracy under completely idealised conditions, but also about getting closer to reality, where conditions are never entirely ideal. In the long term, our plan is to combine our calculations with artificial intelligence (AI) in order to accelerate the entire research process.

When: 6 hours on March 22, 2023

Allocation: 60,000 GPU hours

Researcher: Kristian Sommer Thygesen

Team of developers: Mikael Kuisma, Jens Jørgen Mortensen, Ask Hjort Larsen and Tara Boland

Artificial Intelligence (AI) helps discover entirely new materials

The idea is to use AI to guide our calculations towards the materials that are most interesting, for example in the development of solar cell materials and catalysts. We want to find better catalysts for electrolysis, and solar cells that can be thinner and more flexible. Besides determining the properties of the materials already known to us, which was the purpose of the LUMI Grand Challenge project, there is a need to discover completely new materials, because the bottleneck is often the set of materials that we have available (that we know of). In principle, there are many materials that could be produced if we mix the right elements in the right proportions and under the right conditions. This is where the combination of AI and quantum mechanical calculations on supercomputers is really the way forward. We're actually running on LUMI right now (June 2023, ed.) because we didn't get to use all our GPU hours, and we're now getting all these materials analysed. All the results, together with the metadata that will make the data FAIR (findable, accessible, interoperable and reusable), will be put into a database that is accessible online.

LUMI has become a regular guest at DTU Physics

The main goal and challenge of the LUMI Grand Challenge was not to perform the calculations themselves, but to scale them up to utilise the 10,000 GPUs on LUMI. Now that we have evaluated the experience and had time to improve the software, we feel well prepared to use LUMI more regularly. There are lots of resources that we can tap into, of course, and we will do this in combination with our own supercomputer, Niflheim. We just need to get into a flow now where we remember to apply for the LUMI resources, which we will then probably use for more strategic projects. As already mentioned, I was a little reluctant to use GPUs and LUMI myself because I thought it would be too demanding to port code and so on. But now that we have seen that it is manageable and that excellent help is available, I would say that you should just jump into it. In the long run, it's a good idea to get started, and possibly to get some help with it, because things are moving quickly in the direction of GPUs!

LUMI can realise bigger projects and bigger ideas

With LUMI resources, we can think in terms of bigger projects and bigger ideas. We can address scientific challenges that don't just entail running lots of calculations to map out lots of materials, but also, for example, calculating more complex properties of these materials.

"Simply put, we can get closer to reality with a higher degree of accuracy in our calculations because we can include more factors in the calculations. Reality is complex," says Kristian Sommer Thygesen, DTU Physics.

LUMI is a supercomputer on a different level than what we normally call supercomputers in Denmark.

Kristian Sommer Thygesen

Researches atomic-scale design of new materials using HPC, and is known for developing a comprehensive database of two-dimensional (2D) materials. The database shows not only the crystal structure of the materials, but also their basic mechanical, electronic, optical and magnetic properties, which benefits research into new materials for e.g. solar cells, catalysts and quantum light sources.

Resources

1. LUMI supercomputer: https://www.lumi-supercomputer.eu

2. Apply for computational resources on LUMI: https://www.deic.dk/en/supercomputing/Apply-for-HPC-resources

3. Full machine run on LUMI, find out more here: https://docs.lumi-supercomputer.eu/runjobs/scheduled-jobs/hero-runs/